INTRODUCTION

In pharmacoeconomic analysis, we oftentimes incorporate data from external sources to inform the parameters of our economic models. It’s not uncommon for us to have some degree of uncertainty surrounding the values of our parameters. The size and influence of each uncertainty may influence our conclusions, which can significantly change based on a single parameter. For instance, if a new drug (Drug A) entering the market had an incremental cost-effectiveness ratio (ICER) that was greater than the stakeholder’s willingness-to-pay (WTP) threshold when compared to standard of care (Drug B), lowering Drug A’s price may move the ICER to below the WTP threshold (Figure 1). This could change the conclusion of the study from “Drug A is not cost effective compared to Drug B,” to “Drug A is cost effective compared to Drug B.” Of course, this depends on where the WTP threshold is located on the cost-effectiveness plane, but it highlights the importance of understanding the influence of a single parameter to a pharmacoeconomic model’s conclusion. This type of analysis to understand the influence of a single parameter on the model’s conclusion can be illustrated using a one-way sensitivity analysis.

Figure 1. Example of the incremental cost-effectiveness ratio (ICER) changing due to a change in the price of Drug A.

Multiple one-way sensitivity analyses can be performed and arranged in a way to visualize the parameters with the greatest influence on the study’s outcome using a tornado diagram. The tornado diagram arranges the impact of each one-way sensitivity analyses from largest (top) to smallest (bottom) on the chart (Figure 2).

Figure 2. Example tornado diagram.

You can perform multiple one-way sensitivity analysis and arrange them in a tornado diagram using Excel’s Visual Basic Application (VBA) macros. In this tutorial, you’ll be able to create a series of VBA macros to conduct one-way sensitivity analyses and visualize these as a tornado diagram.

MOTIVATING EXAMPLE

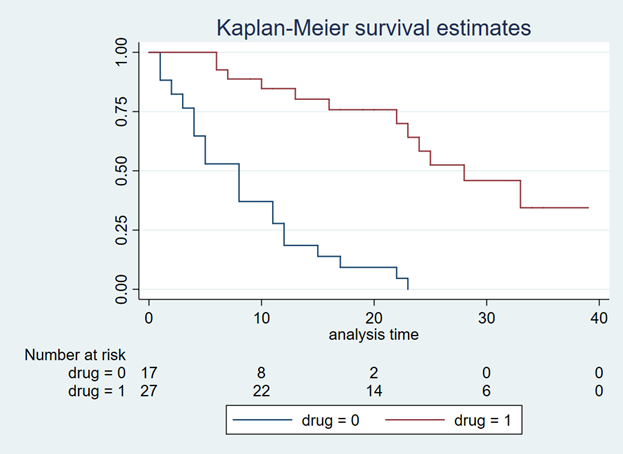

We will use a hypothetical decision tree model to perform a series of one-way sensitivity analyses. You can download the Excel file and high resolution images for this tutorial from the GitHub repository for Decision Tree Tutorials here. The example decision tree and its parameters are illustrated in Figure 3.

Figure 3. Decision tree and relevant parameters.

The base-case ICER comparing Drug A to Drug B is $5875 per one additional Cure achieved (Figure 4). The ICER is located in the Northeast quadrant.

Figure 4. Results from the decision tree and cost-effectiveness plane.

We will create a macro that will replace the Base-case value with the LL and UL values (lower limit and upper limit, respectively) for every parameter and re-calculate the ICER each time.

Here is what the final one-way sensitivity analysis table will look like after the macros are run (Figure 5). Notice how the Number column is not in ascending order. That’s because this is ordered by the Spread column, which is the absolute difference between the UL_ICER and LL_ICER. We sort by the Spread because it allows for the tornado diagram to place the parameter with the greatest influence on the base-case ICER at the top and the parameter with the least influence at the bottom.

Figure 5. Results from the one-way sensitivity analysis.

We will create three macros. The first macro will sort the Number column in ascending order. This helps with the one-way sensitivity analysis calculations. The second macro will perform the one-way sensitivity analyses by replacing the Value with the LL or UL values. The last macro will sort the Spread in descending order for the tornado diagram.

Note: In this tutorial, the tornado diagram is already generated and mapped to the data on the one-way sensitivity analysis table. I wrote a tutorial on how to create a tornado diagram, which can be accessed here.

To create and edit macros, you will need to make sure that the Developer tab is available on the ribbon panel (Figure 6).

Figure 6. Developer tab on Excel’s ribbon panel.

In Excel, go to the File > Options. In the Options window, select Customize Ribbon and check the box next to Developer in the Main Tabs column (Figure 7).

Figure 7. Enabling the Develop tab in the Options window.

Once the Develop tab is enabled, we can begin to examine the macros. To open the VBA editor, go to the Develop tab and click on Macros as seen in Figure 8. There are several macros that we can see under the Macro window. Select the oneway_order macro and click on Edit. This will open the VBA editor where we can examine the macro.

Figure 8. Open the VBA editor.

The VBA editor has several important features.

In the left panel are the various macros and their location. The right panel contains the VBA code for the macro. The macro controls allow you run (“Play”), pause, or stop the macro.

Figure 9. VBA editor interface.

Note: Normally, we save our Excel file using the *.xlsx extension. However, when you have an Excel macro, you need to save the file as an *.xlsm extension. This will allow you to save your macros and enable them later.

VBA Macro 1 – Sorting the one-way sensitivity analysis table

Let’s examine the first macro: oneway_order

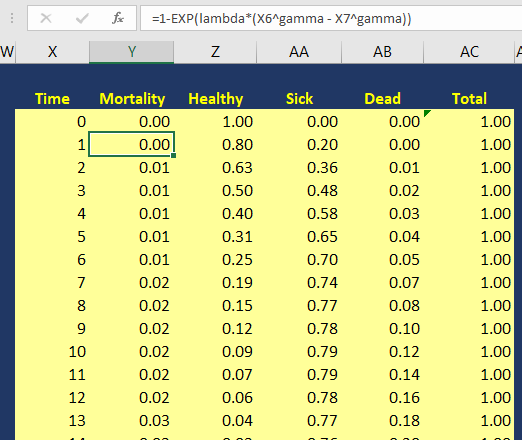

The VBA code first selects the sheet, which is labeled as OWSA model. Within the sheet, it selects the area defined by AM7:AV15 which is the one-way sensitivity analysis table in the Excel spreadsheet. The next part of the code orders the one-way sensitivity analysis table in ascending order based on the values in the Number column which is defined as AM8:AM15.

Note: Since this is on the same worksheet, we can leave the label of the active sheet as OWSA model. But if you were using different worksheets, you need to make sure to change the active sheet’s name.

The second part of the code tells the VBA macro to sort according to columns and that the first row contains the headers or column labels.

Figure 10. VBA Macro 1 – sort by ascending order the one-way sensitivity analysis table.

VBA Macro 2 – Re-calculate the ICERs with the lower and upper limits for each parameter

The second macro performs the one-way sensitivity analysis, and it is labelled as oneway_macro. Let’s take a close look at the VBA code for this macro. Notice how there is a low and high component in this macro. This is due to the LL and UL on the parameters table in the decision tree model. The oneway_macro will replace the base-case values with the value from the LL and UL columns in the decision tree model. This will need to be repeated for each parameter. In this decision tree model, there are 8 parameters that we will perform the one-way sensitivity analyses on. For the VBA macro, we will call these 0 to 7.

Figure 11 provides a close inspection of the macro and its elements in relation to the decision tree model in Excel. Notice that this is a loop function. The loop will start at 0 and end at 7 iterations, which is a total of 8 iterations, the same number of parameters in the decision tree. In the VBA macro, this is initiated with For low = 0 to 7 and then repeats with Next low until it reaches 8 iterations.

By changing these parameters with the LL and UL values, the ICER will be re-calculated. Once recalculated, we can update the one-way sensitivity analysis table with the new ICER value. In the VBA macro the re-calculated ICER is in cell AI7, and its value is imputed into the one-way sensitivity analysis table starting at cell AT8.

One the VBA macro completes, all 8 parameters will have undergone the one-way sensitivity analyses, and the re-calculated ICERs would be imputed into the one-way sensitivity analysis table; the LL_ICER and UL_ICER columns will contain the re-calculated ICERs for the LL and UL values from the parameters table.

Figure 11. VBA macro to perform series of one-way sensitivity analyses.

VBA Macro 3 – Re-sort the one-way sensitivity analysis table using the “Spread” in descending order

Once the ICERs have been re-calculated using the LL and UL from the parameters table, we can estimate their influence by calculating the absolute difference between the UL_ICER and LL_ICER. We want the absolute difference because we’re interested in visualizing the difference with the low and high values from each parameter.

Here is what VBA Macro 3 looks like:

This is very similar to the VBA Macro 1, which orders the one-way sensitivity analysis table based on the Number column. The only difference is that instead of using the Number column, VBA Macro 3 will sort in descending order the one-way sensitivity analysis using the Spread column, which contains the absolute difference between UL_ICER and LL_ICER.

Let’s take a look at the VBA macro closely (Figure 12).

Figure 12. VBA Macro 3 – re-order the one-way sensitivity analysis table by “Spread.”

The macro first selects the one-way sensitivity analysis table (AM7:AV15). It then selects the Spread column to sort in descending order (AM8:AM15). Once sorted, the macro will end.

Note: You may notice that the Order:=xlAscending option may sound counter-intuitive since we are sorting by descending order. The reason why the option has this in xlAscending order is because the tornado diagram uses a reverse axis on the Format Axis option (see Figure 13).

Figure 13. Format axis option to have the categories in reverse order.

Assigning macros

Once the one-way sensitivity analysis table is completed, we can assign these macros to buttons on the worksheet.

We can create buttons using the Shapes function in Excel. Right-click on the shape and select Assign macro. Create three of these. Figure 14 illustrates the steps to assign macros to each button.

Figure 14. Assigning macros to buttons in Excel.

We will assign oneway_order macro to the first button (Figure 15).

Figure 15. VBA Marco 1 assigned to the “Step 1” button.

We do the same thing for VBA Macro 2 (Figure 16).

Figure 16. VBA Macro 2 assigned to the “Step 2” button.

Lastly, we assign the final VBA macro to the “Step 3” button (Figure 17).

Figure 17. VBA Macro 3 assigned to the “Step 3” button.

Final steps

One all the buttons have been assigned to a VBA macro, we can click on each one in order to repeat the steps of re-calculating the ICER and updating the one-way sensitivity analysis table.

We can also see the changes to the tornado diagram (Figure 18).

Figure 18. Final tornado diagram.

At this stage of the tutorial, it’s good practice to inspect your entire Excel file to ensure that no data was accidentally lost or erased during the creating of the macros. Sometimes, if we select the wrong cells, we can overwrite our data. It’s good practice to save different versions of the Excel file so that you can return to your previous work in case something goes wrong.

Conclusions

Excel provides a power tool to generate VBA macros to perform functions iteratively. We leveraged this tool to perform a series of one-way sensitivity analyses which we then used to create a tornado diagram. Since this tutorial used a single worksheet, the process is much easier to code and diagnose. However, as we expand and create more worksheets in our Excel file, we need to be careful to select the proper sheets when using these VBA macros.

Acknowledgements

I used templates of previous one-way sensitivity analyses to build these VBA macros in this tutorial. Admittedly, it has been years since I first began doing this in Excel, and I don’t recall the sources. However, I wanted to acknowledge them as they deserve all the credit for helping me understand and apply these tools to building pharmacoeconomic models. If I find out the sources of these macros, I will post them here. I do know that my courses at the University of Washington was a source for some of the VBA macros for constructing pharmacoeconomic models, and I wanted to acknowledge their work and materials.

References

You can download the Excel file(s) from the GitHub Repository on Decision Trees Tutorials (link).

Disclaimers

This is a work in progress, therefore, there may be updates in the future.

This is for educational purposes only.